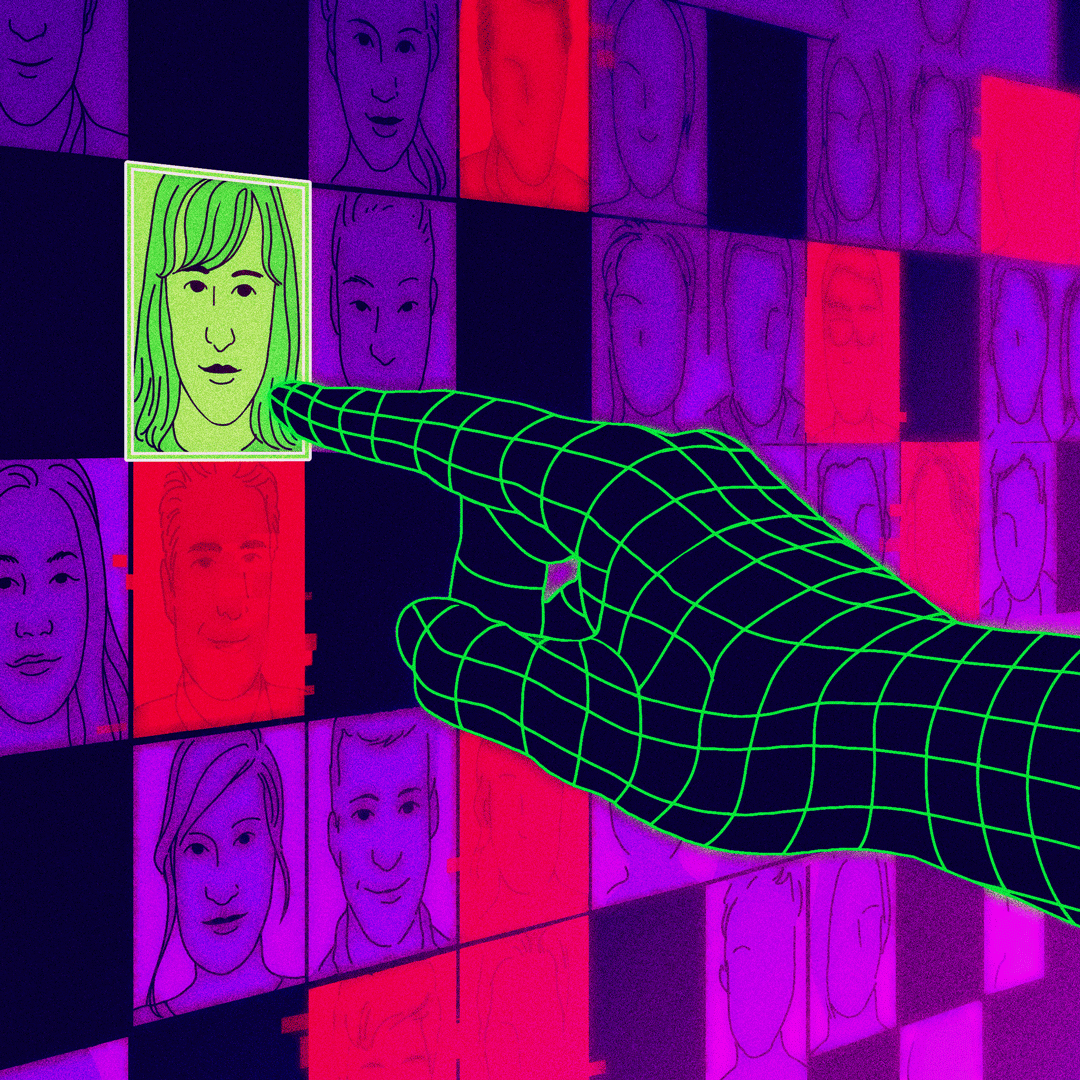

Companies are increasingly using chatbots to interview and screen job applicants, often for blue-collar jobs. But experts and job applicants worry that, like other algorithmic hiring tools before them, these chatbots could be biased.

In early June, Amanda Claypool was looking for a job at a fast-food restaurant in Asheville, North Carolina. But she faced an unexpected and annoying hurdle: glitchy chatbot recruiters.

A few examples: McDonald’s chatbot recruiter “Olivia” cleared Claypool for an in-person interview, but then failed to schedule it because of technical issues. A Wendy’s bot managed to schedule her for an in-person interview, but it was for a job she couldn’t do. Then a Hardee’s chatbot sent her to interview with a store manager who was on leave, which was hardly a seamless recruiting strategy.

“I showed up at Hardee’s and they were kind of surprised. The crew operating the restaurant had no idea what to do with me or how to help me,” Claypool, who ultimately took a job elsewhere, told Forbes. “It seemed like a more complicated thing than it had to be,” she said. (McDonald’s and Hardee’s didn’t respond to requests for comment. A Wendy’s spokesperson told Forbes the bot creates “hiring efficiencies,” adding “innovation is our DNA.”)

HR chatbots like the ones Claypool encountered are increasingly being used in industries such as healthcare, retail and restaurants to filter out unqualified applicants and schedule interviews with the ones who might be right for the job. McDonald’s, Wendy’s, CVS Health and Lowe’s use Olivia, a chatbot developed by Paradox, an Arizona-based AI startup valued at $1.5 billion. Other companies, like L’Oreal, rely on Mya, an AI chatbot developed by a San Francisco startup of the same name. (Paradox didn’t respond to a request for comment about Claypool’s experience.)

Most hiring chatbots are not as advanced or elaborate as contemporary conversational chatbots like ChatGPT. They’ve primarily been used to screen for jobs that draw a high volume of applicants, such as cashiers, warehouse associates and customer service assistants. They are rudimentary and ask fairly straightforward questions: “Do you know how to use a forklift?” or “Are you able to work weekends?” But as Claypool found, these bots can be buggy, and there isn’t always a human to turn to when something goes wrong. And the clear-cut answers many of the bots require could mean automatic rejection for some qualified candidates who don’t answer questions the way the bot expects them to.

That could be a problem for people with disabilities, people who are not proficient in English and older job applicants, experts say. Aaron Konopasky, senior attorney advisor at the U.S. Equal Employment Opportunity Commission (EEOC), fears chatbots like Olivia and Mya may not provide people with disabilities or medical conditions with alternative options for availability or job roles. “If it’s a human being that you’re talking to, there’s a natural opportunity to talk about reasonable accommodations,” he told Forbes. “If the chatbot is too rigid, and the person needs to be able to request some kind of exemption, then the chatbot might not give them the opportunity to do that.”


Discrimination is another concern. Prejudice embedded in the data used to train AI can bake bias and discrimination into the tools built on it. “If the chatbot is looking at things like how long it takes you to respond, or whether you’re using correct grammar and complex sentences, that’s where you start worrying about bias coming in,” said Pauline Kim, an employment and labor law professor at Washington University whose research focuses on the use of AI in hiring tools. But such bias can be tough to detect when companies aren’t transparent about why a candidate was rejected.

Recently, lawmakers have introduced legislation to monitor and regulate the use of automation in hiring. In early July, New York City began enforcing a law that requires employers who use automated tools like resume scanners and chatbot interviews to audit those tools for gender and racial bias. An Illinois law that took effect in 2020 requires employers who use AI to analyze video interviews to notify applicants and obtain their consent.

Still, for companies looking to trim recruiting costs, AI screening agents seem an obvious option. HR departments are often among the first places to see staff reductions, said Matthew Scherer, senior policy counsel for workers’ rights and technology at the Center for Democracy and Technology. “Human resources has always been a cost center for a company; it’s never been a revenue-generating thing,” he explained. “Chatbots are a very logical first step to try and take some of the load off of recruiters.”

That’s part of the rationale behind Sense HQ, which provides companies like Sears, Dell and Sony with text messaging-based AI chatbots that help their recruiters wade through thousands of applicants. The company claims it’s already been used by some 10 million job applicants, and co-founder Alex Rosen told Forbes such numbers mean a much bigger pool of viable candidates.

“The reason that we built a chatbot in the first place was to help recruiters talk to a wider swath of candidates than they might be able to do on their own,” he said, adding the obligatory caveat: “We don’t think that the AI should be making the hiring decision on its own. That’s where it gets dangerous. We just don’t think that it’s there yet.”

RecruitBot is bringing AI to bear on hiring by using machine learning to sift through a database of 600 million job applicants scraped from LinkedIn and other job marketplaces, all with the goal of helping companies find candidates similar to their current employees. “It’s sort of like how Netflix recommends movies based on other movies you like,” CEO and founder Jeremy Schiff told Forbes. But here, too, bias is an obvious concern; hiring more of the same has its pitfalls. In 2018, Amazon scrapped a machine learning-based resume screening system that discriminated against women because its training data consisted mostly of men’s resumes.


Urmila Janardan, a policy analyst at Upturn, a nonprofit that researches how technology affects people’s opportunities, noted that some companies have also turned to personality tests to weed out candidates, and the screening questions may have nothing to do with the job. “You might even be potentially rejected from the job because of questions about gratitude and personality,” she said.

For Rick Gned, a part-time painter and writer, a personality quiz was part of a chatbot interview he did for an hourly-wage shelf-stacking job at the Australian supermarket chain Woolworths. The chatbot, made by AI recruitment firm Sapia AI (formerly known as PredictiveHire), asked him to give 50- to 150-word answers to five questions and then analyzed his responses, looking for traits and skills that matched the recruiters’ preferences. Concluding that Gned “deals well with change” and is “more focused on the big picture that causes him to look over details,” it advanced him to the next interview round. While Sapia AI does not require applicants to answer questions within a time limit, the system measures the sentence structure, readability and word complexity of their text responses, Sapia AI CEO and cofounder Barb Hyman said in an email.

Gned found the whole thing dehumanizing and worrisome, he told Forbes. “I’m in a demographic where it doesn’t affect me, but I’m worried for people who are minorities, who predominantly make up the lower-income labor market.”

For one job applicant, who requested anonymity to speak freely, chatting with a bot had at least one upside. When filling out hundreds of job applications, he often never hears back, but the bot at least assured him that his application had been received. “It did feel like a morale boost in so many ways,” he said. “But if I had to do this (text with a chatbot) with every job I applied to, it would be a pain in the ass.”